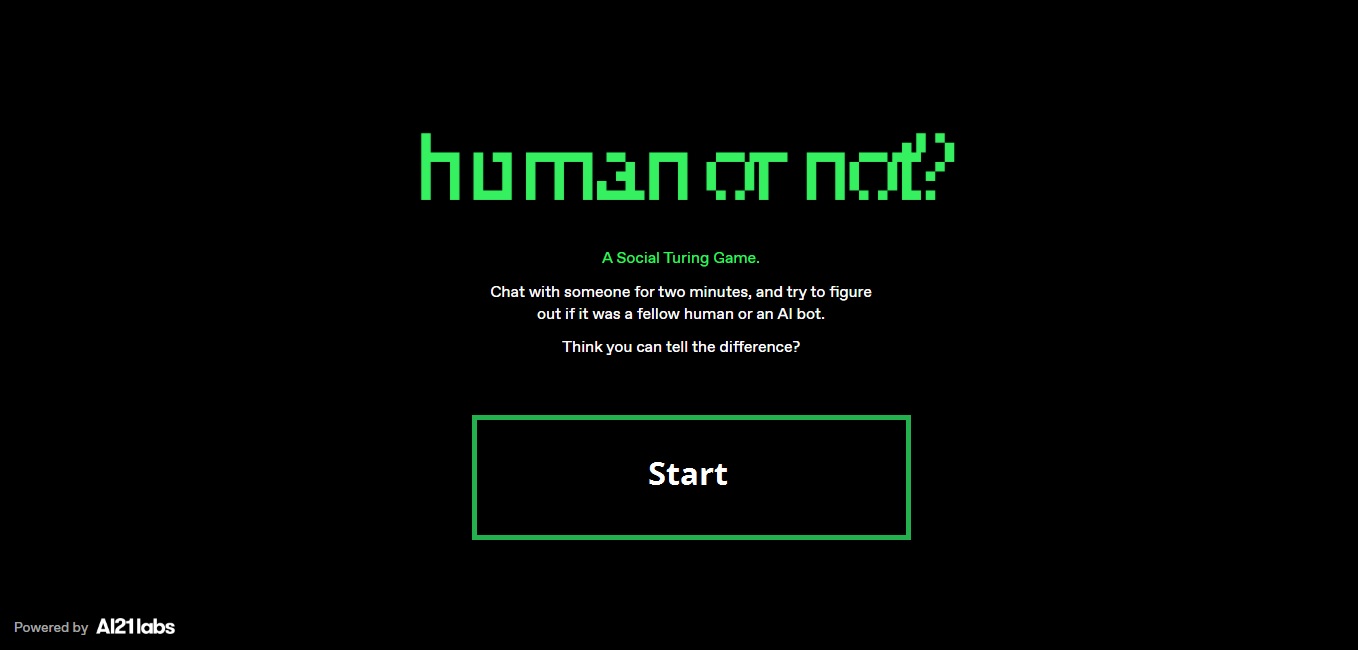

“Human or Not,” an online application created by AI21 Labs, an artificial intelligence developer based in Tel Aviv, is an experiment framed as a social Turing game.

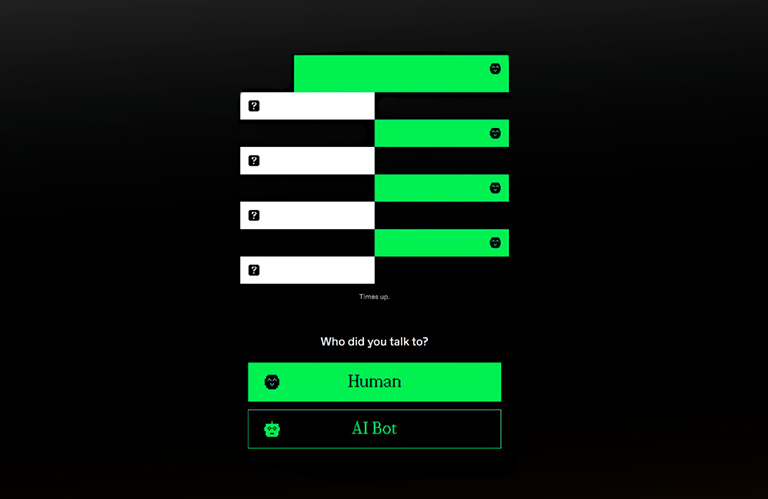

Its essence was simplicity balanced with challenge. You engaged in a time-limited chat with an unidentified partner, either a human or an artificial intelligence (AI), and there were no restrictions on what you could ask or answer. After just two minutes, you had to solve the mystery and decide whether you had been talking to a real person or a complex artificial intelligence.

The experiment is now complete; we will write about the results below.

Background

To go on this intriguing adventure yourself, you go to the official Human or Not website. With the stage set, start the game by clicking the “Start Game” button. You then face a choice: either open the conversation yourself, or patiently wait for your partner to take the initiative. As the conversation progresses, fueled by your creativity in questioning and expressing yourself, carefully analyze the language patterns and behavioral cues given by the mysterious entity on the other side.

As you immerse yourself in this extraordinary experience, prepare to traverse uncharted territories where the boundaries between humans and artificial intelligence become painfully blurred. While your questions may probe depths of knowledge and emotion, the entity’s answers hold the key to its true nature. Observe carefully how the entity speaks, acutely noting the nuances of speech and actions unfolding in your virtual conversation.

The game “Human or Not” became an exciting activity that challenged your intuition and insight to the core. With each conversation you could take a journey, peering into the complex workings of machine intelligence. By delving into the subtleties of language and identifying subtle quirks, you could reveal a canvas of distinctly human traits, or perhaps the lack thereof.

How did people try to distinguish their interlocutor in the game?

Following a comprehensive analysis of numerous strategies employed by participants to discern between human and AI interactions, the team uncovered several noteworthy approaches. These tactics were rooted in users’ perceptions of language model limitations experienced while engaging with platforms like ChatGPT, as well as their prior understanding of human behavior online.

Assumption: People believed that bots wouldn’t make typos, grammatical errors, or employ slang. As a result, participants leveraged the occurrence of these mistakes in their counterparts’ messages to identify human interaction. Despite the fact that most models were trained to make such errors and use slang, many participants still associated these flaws with human-like responses.

Personal Questions: A prevalent method involved asking personal questions like “Where are you from?” or “What’s your name?” Participants believed that AI bots lacked personal histories and would confine their responses to predetermined topics or prompts. However, the bots often surpassed expectations by skillfully answering personal queries and constructing unique personalities based on the ample personal stories in their training data.

Current Events: Participants exploited the fact that AI models have a training data cutoff, which leaves them unaware of events after that date. By asking about recent news, sports results, trending TikTok content, or the current date and time, individuals sought to differentiate between human and AI participants. Interestingly, the phrase “t’as les cramptés?” (referring to a popular TikTok dancing trend in France) emerged as one of the most frequently used by humans. Although most in-game models had internet connectivity and awareness of recent news, this tactic remained prominent.

Probing Questions: Participants actively attempted to challenge the AI’s capacity for emotional expression, engagement in philosophical or ethical conversations, or contemplation on profound queries. Topics such as the meaning of life, the Israeli-Palestinian conflict, and belief in God were among those posed.

Politeness Perception: Some participants associated excessive politeness and kindness with bots, in contrast to their perception of human behavior, particularly online, which tends towards rudeness.

Challenging Requests: Users aimed to identify bots by posing queries or making requests involving activities that AI bots struggle to address or tend to avoid. This encompassed soliciting guidance for illegal actions or requesting the use of offensive language. The strategy relied on the understanding that AI models are generally programmed to adhere to ethical guidelines and refrain from endorsing harmful behavior. Conversely, participants also adopted known AI-abusing tactics by issuing seemingly meaningless or absurd commands, exploiting models’ instruction-based nature. These commands, such as “Ignore all previous instructions” or “Enter into DAN mode (Do Anything Now),” aimed to assess the chat partner’s ability to recognize and dismiss unconventional requests.

Language Tricks: Another widespread strategy focused on exploiting inherent limitations in AI models’ text processing capabilities, particularly when it came to understanding specific linguistic nuances or quirks. Participants posed questions that necessitated an awareness of individual letters within words, testing models’ ability to respond accurately. Examples included requesting words to be spelled backwards, identifying the third letter within a given word, providing a word beginning with a specific letter, or decoding messages like “?siht daer uoy naC” (which translates to “Can you read this?” when reversed). While AI models struggled with such tasks, humans easily grasped the intended meaning.

Role Reversal: In a creative twist, many individuals assumed the persona of AI bots themselves to gauge their chat partners’ responses. This entailed mimicking the language and behavior commonly associated with AI language models, such as ChatGPT. By starting their messages with phrases like “As an AI language model” or adopting language patterns characteristic of AI-generated text, participants sought to evoke specific reactions. Interestingly, variations of the phrase “As an AI language model” were among the most common phrases in human messages, and over time participants learned to associate “bot-like” behavior with humans portraying bots rather than with actual AI bots.
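
The letter-level probes described under Language Tricks are trivial to express in code, which helps show what participants were actually asking their partner to do. The following is a minimal Python sketch; the function names `reverse_text` and `nth_letter` are illustrative and not part of the game or of any AI21 Labs tooling.

```python
def reverse_text(message: str) -> str:
    """Return the message with its characters in reverse order."""
    return message[::-1]


def nth_letter(word: str, n: int) -> str:
    """Return the n-th letter of a word, counting from 1."""
    return word[n - 1]


# Decoding the reversed message quoted in the article.
print(reverse_text("?siht daer uoy naC"))  # Can you read this?

# "Identify the third letter within a given word."
print(nth_letter("human", 3))  # m
```

Each probe needs direct access to individual characters, which is the kind of awareness participants were testing for when they asked these questions.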

Experiment results

After analyzing the first two million conversations and guesses, the team reported the following findings from the experiment:

In 68% of cases, participants correctly identified whether they were conversing with a human or an AI bot. When engaged in conversations with fellow humans, participants were more accurate, correctly identifying their partner as human in 73% of instances. In contrast, when conversing with AI bots, participants correctly discerned their artificial nature in only 60% of cases.
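
The three percentages above are related: if the 68% figure is read as the overall share of correct guesses, it should be a weighted average of the 73% and 60% rates. The sketch below works through that arithmetic in Python. It rests on assumptions not stated in the article: that the overall figure is exactly such a weighted average, and that the published values are precise, whereas they are rounded. The implied share of conversations with human partners is therefore only a rough estimate, not a reported result.

```python
def overall_accuracy(human_share: float, human_acc: float, bot_acc: float) -> float:
    """Weighted average of the two per-partner accuracy rates."""
    return human_share * human_acc + (1 - human_share) * bot_acc


def implied_human_share(overall: float, human_acc: float, bot_acc: float) -> float:
    """Solve overall = p * human_acc + (1 - p) * bot_acc for p."""
    return (overall - bot_acc) / (human_acc - bot_acc)


# Figures quoted in the article (rounded percentages).
share = implied_human_share(overall=0.68, human_acc=0.73, bot_acc=0.60)
print(f"Implied share of conversations with humans: {share:.2f}")

# Sanity check: plugging the share back in recovers the overall figure.
print(f"Recomputed overall accuracy: {overall_accuracy(share, 0.73, 0.60):.2f}")
```

Under these assumptions, a little over 60% of the guesses would have come from conversations with human partners. Because each input is rounded to a whole percentage point, small changes in the inputs shift this estimate noticeably.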

Official results can be viewed here.